NSX-T 3.0 Design Bootcamp 3: NSX Manager 1-2

In this blog, NSX-T 3.0 Design Bootcamp 3: NSX Manager 1-2, let's continue our discussion of the NSX-T Manager. To start with, we will talk about one of the basic topics that got lost in the transition from the previous article: interoperability. We will go through that first, and below is the short list of topics to think through.

- Interoperability and sizing

- NSX-T manager single site design considerations

NSX-T Manager Interoperability

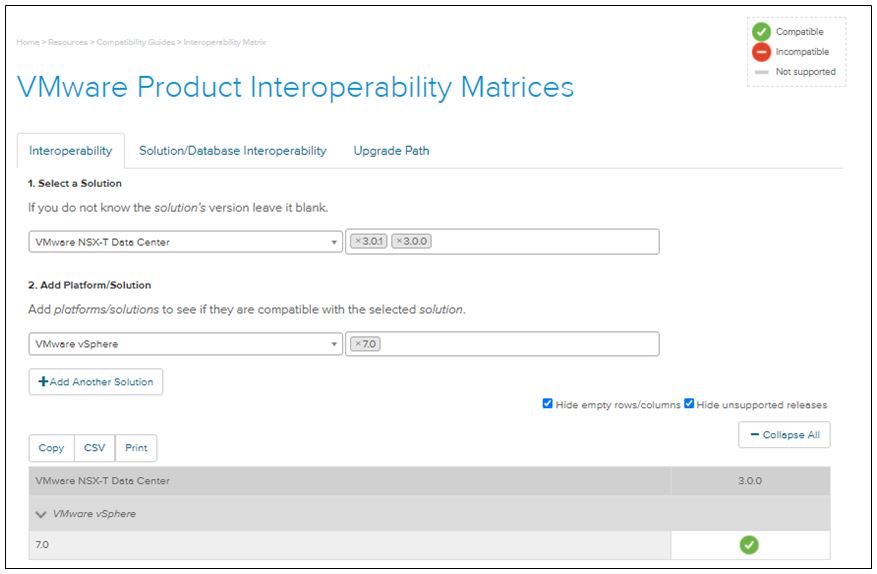

Importantly, NSX-T is no longer limited to the VMware stack; KVM hypervisors can also be part of it. When you choose your NSX-T version, it is important to check the hypervisor and vSphere compatibility matrix.

Two key resources certainly help here: the interoperability matrix and the compatibility guide. Also note that KVM is not only usable as a transport node; the NSX-T Manager itself can be hosted on KVM. However, mixed deployments of managers on both ESXi and KVM are not supported. And without a doubt, it is better to host the NSX Manager on ESXi rather than on KVM, for some understandable reasons.
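The "no mixed deployments" rule above is easy to check in a pre-deployment script. The following is only a minimal sketch in plain Python: the function name, the node names, and the input format (manager name mapped to hypervisor type) are assumptions made for illustration, not part of any NSX-T API.

```python
# Hypothetical pre-deployment check: all manager nodes must sit on ONE platform.
SUPPORTED_PLATFORMS = {"esxi", "kvm"}


def check_manager_platforms(managers):
    """managers maps a manager node name to its hypervisor type.

    Returns a list of problems; an empty list means this check passes.
    """
    problems = []
    platforms = {platform.lower() for platform in managers.values()}

    unknown = platforms - SUPPORTED_PLATFORMS
    if unknown:
        problems.append(f"unknown platform(s): {sorted(unknown)}")

    # Mixed ESXi + KVM manager deployments are not supported.
    if len(platforms & SUPPORTED_PLATFORMS) > 1:
        problems.append("managers are mixed across ESXi and KVM")

    return problems


if __name__ == "__main__":
    plan = {"nsx-mgr-1": "esxi", "nsx-mgr-2": "esxi", "nsx-mgr-3": "kvm"}
    for problem in check_manager_platforms(plan):
        print("PROBLEM:", problem)
```

A check like this does not replace the interoperability matrix; it only catches the one placement mistake called out above.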

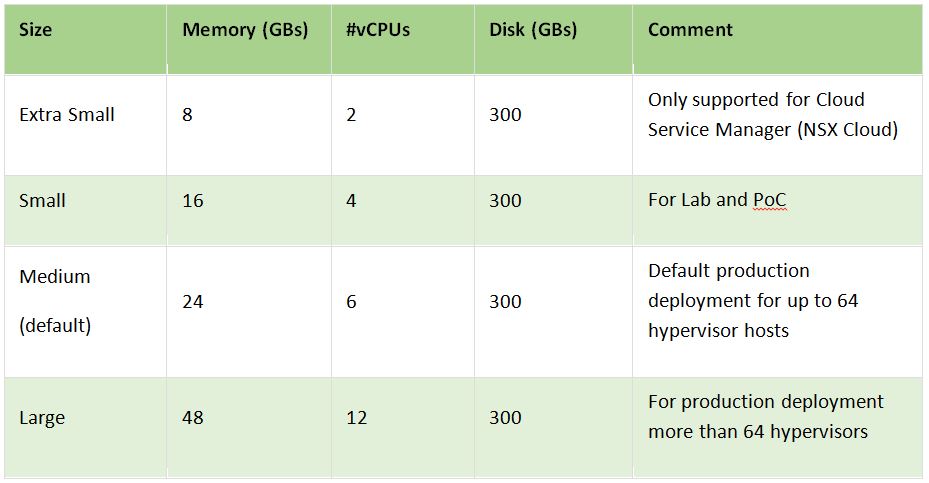

NSX-T Manager Sizing

Obviously, sizing is a key prerequisite for allocating the resources the NSX-T Manager needs. You should also be aware that VMware can only help you with the software. Furthermore, the role your NSX-T Manager can play depends on the size you choose during deployment. For example, if you go with extra small, you can only use it as a Cloud Service Manager.

NSX-T manager single site design

Now let's get into the more thought-provoking topics such as HA, FT, and segmentation. Just to keep the scope under control, we will limit the talk to a single site. With a single-site deployment, the key design considerations include formulating the high availability, fault tolerance, and compliance requirements.

HA and FT considerations

Many factors can affect the HA and FT state; the key ones are listed below.

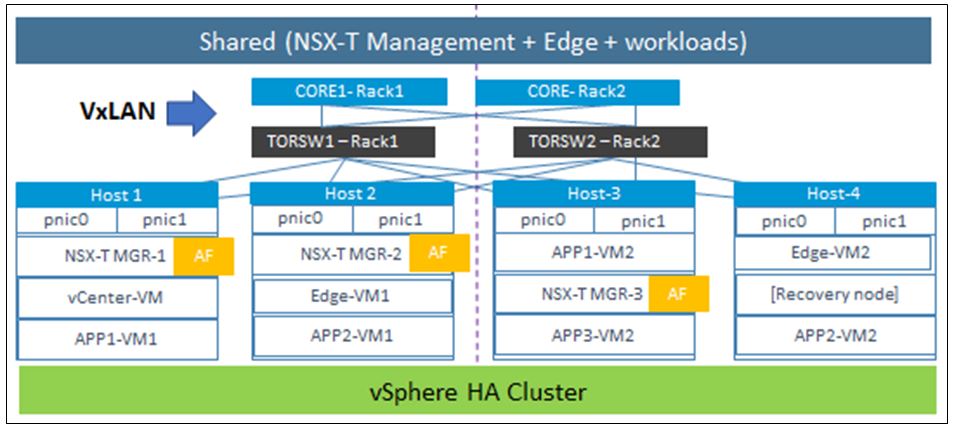

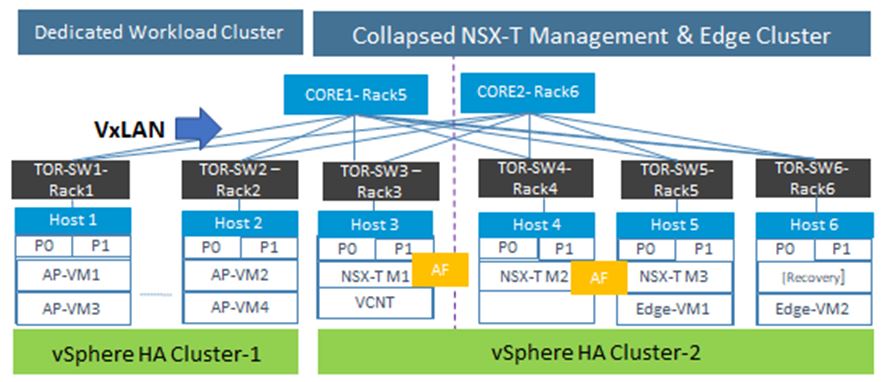

Rack & bare metal host redundancy: The key here is to distribute the NSX-T Manager nodes across hosts that are part of separate racks. This ensures that if a host or a rack fails, the remaining NSX-T Manager nodes keep the cluster running.

vSphere HA with a minimum of 4 nodes: The customary production operating state is a three-node NSX Manager cluster. However, you should add one more server host to the HA cluster as a recovery host. The idea is that when any host running an NSX Manager is lost, vSphere recovers the lost NSX Manager on the fourth host.

Anti-affinity: Using anti-affinity rules is certainly a best practice, because it ensures that all three cluster members are located on different hosts/racks.

Use shared storage and subnets: This goes hand in hand with vSphere HA. HA can make use of shared storage during maintenance activity or failure scenarios. And to ensure that any virtual machine can run on any host in the cluster, all hosts must have access to the same virtual machine networks.

Use dual NICs and dual-homing: The key here is to use at least a pair of interfaces with adequate bandwidth. This helps avoid a bottleneck during vMotion or when one of the interfaces fails. Surprisingly, this does not sit well with typical server gurus, who consider the practice to introduce more complex troubleshooting scenarios. The fact is that dual-homing solutions such as Cisco vPC or MLAG are worthwhile mechanisms, as they help with upstream switch redundancy. I hope that as more network engineers move into the virtual realm, this gets sorted out soon.
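The placement rules above (three manager nodes, each on a different host and rack, plus a fourth host for vSphere HA recovery) can be expressed as a small validation routine. This is only a sketch in plain Python under stated assumptions: the `Placement` record, the function name, and the host and rack labels are all hypothetical, and the inventory would in practice come from vCenter or a CMDB export rather than being typed in by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    """Where one NSX Manager node runs (hypothetical record for this sketch)."""
    manager: str
    host: str
    rack: str


def check_ha_placement(placements, cluster_hosts):
    """Return a list of HA/FT design problems; empty means all checks pass."""
    problems = []
    hosts = [p.host for p in placements]
    racks = [p.rack for p in placements]

    if len(placements) != 3:
        problems.append(f"expected 3 manager nodes, found {len(placements)}")
    # Anti-affinity: no two managers on the same host.
    if len(set(hosts)) != len(hosts):
        problems.append("two or more managers share a host")
    # Rack redundancy: no two managers in the same rack.
    if len(set(racks)) != len(racks):
        problems.append("two or more managers share a rack")
    # vSphere HA needs a recovery host beyond the three in use.
    if len(set(cluster_hosts)) < 4:
        problems.append("HA cluster has fewer than 4 hosts")

    return problems


if __name__ == "__main__":
    layout = [
        Placement("nsx-mgr-1", "esx-01", "rack-a"),
        Placement("nsx-mgr-2", "esx-02", "rack-b"),
        Placement("nsx-mgr-3", "esx-03", "rack-c"),
    ]
    print(check_ha_placement(layout, ["esx-01", "esx-02", "esx-03", "esx-04"]))
```

The sketch does not cover the shared storage, shared network, or dual NIC points; those would need inventory data that is not modeled here.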

Compliance considerations:

The compliance requirements always depend on the organization. However, if you just consider design practices, it is always best to keep the management and data traffic separate.

Let's dig into some of the key aspects of these topics below.

Use separate pNICs: For management traffic, it is always better to use dedicated interfaces that do not carry data traffic. The management traffic could include the VMkernel (vmk) interfaces used for traffic types such as management, vMotion, vSAN, NFS, etc.

Use separate virtual switches: It is important to make sure the management traffic is not disturbed by fat-fingering or other disruptive actions. For this purpose, many deployments run management traffic on a VSS; however, to take advantage of better capabilities, VMware encourages going with DVS/N-VDS.
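Both separation rules above can be checked per host with a few lines of code. The sketch below is plain Python and makes assumptions for illustration only: the function name and the input shape (a dict with a `switch` name and a list of `pnics` for each traffic class) are hypothetical, and the vmnic and switch names are examples.

```python
def check_traffic_separation(mgmt, data):
    """Compare the management and data traffic layout of one host.

    Each argument is a dict with a 'switch' name and a list of 'pnics'.
    Returns a list of problems; empty means the two are separated.
    """
    problems = []

    # Rule 1: management and data must not share a physical NIC.
    shared = sorted(set(mgmt["pnics"]) & set(data["pnics"]))
    if shared:
        problems.append(f"management and data share pNIC(s): {shared}")

    # Rule 2: management and data must not share a virtual switch.
    if mgmt["switch"] == data["switch"]:
        problems.append(f"management and data share virtual switch {mgmt['switch']}")

    return problems


if __name__ == "__main__":
    mgmt = {"switch": "vSwitch0", "pnics": ["vmnic0", "vmnic1"]}
    data = {"switch": "nvds-overlay", "pnics": ["vmnic1", "vmnic2"]}
    for problem in check_traffic_separation(mgmt, data):
        print("PROBLEM:", problem)
```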

Topology considerations:

Now let's blend all the ingredients together and think about how the considerations above develop into a topology. This leads us to two alternatives: shared and dedicated clusters.

Shared cluster: A shared cluster is ideal if your setup is small, let's say no more than 4 physical servers, and you have no compliance policy that forbids management and data functions from sharing the same physical servers. The functions that could be part of the shared vSphere HA cluster include, but are not limited to, the NSX Manager, vCenter Server, Edge VMs, and workload VMs.

Dedicated cluster: In this scenario, the workload clusters are completely separate from the management cluster. The management cluster hosts all the management-related functions such as NSX-T Manager, Edge functions, vCenter, and vRNI, and the workload cluster hosts all the business application servers. This ensures that infrastructure and application teams own and operate their own server builds.
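As a rough rule of thumb, the choice between the two models can be written as a tiny decision function. This is a deliberate simplification and not an official sizing rule: the function name and parameters are hypothetical, the 4-server threshold comes from the shared cluster description above, and treating every other case as "dedicated" is an assumption of this sketch.

```python
def recommend_cluster_model(physical_hosts, must_segregate_mgmt):
    """Suggest 'shared' or 'dedicated' from the two criteria discussed above.

    physical_hosts: number of physical servers available.
    must_segregate_mgmt: True if a compliance policy requires management
        and data functions to run on separate physical servers.
    """
    # Small setup with no segregation policy: a shared cluster is enough.
    if physical_hosts <= 4 and not must_segregate_mgmt:
        return "shared"
    # Assumption of this sketch: anything else goes to a dedicated design.
    return "dedicated"


if __name__ == "__main__":
    print(recommend_cluster_model(physical_hosts=4, must_segregate_mgmt=False))
    print(recommend_cluster_model(physical_hosts=12, must_segregate_mgmt=False))
```

Real designs weigh more than these two inputs, such as team ownership and growth plans, so treat the result as a starting point for the discussion.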

Summary and Next Steps

I tried to keep this post short so that it does not take too much of your time. I will go into more detail on the topology scenarios discussed here in my bootcamp video series. Furthermore, I am planning to dedicate my next blog to NSX-T multi-site considerations. Until then, for more reading on NSX-T related topics, please refer to our VMware section.
